by Maria Sofou

Innovator Guy Ben-Ary has developed something pretty cool: an interactive synthesizer that uses his own stem cells!

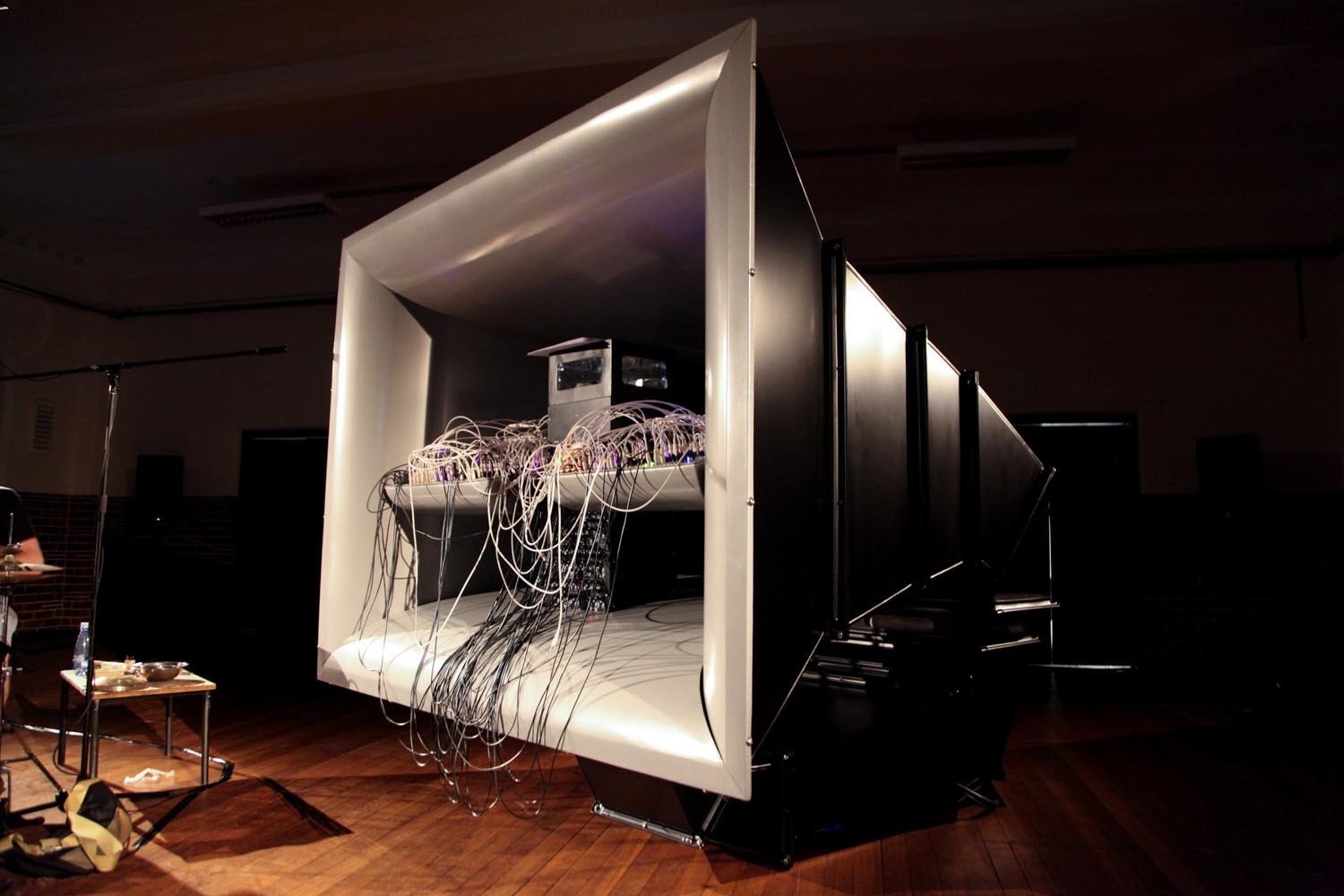

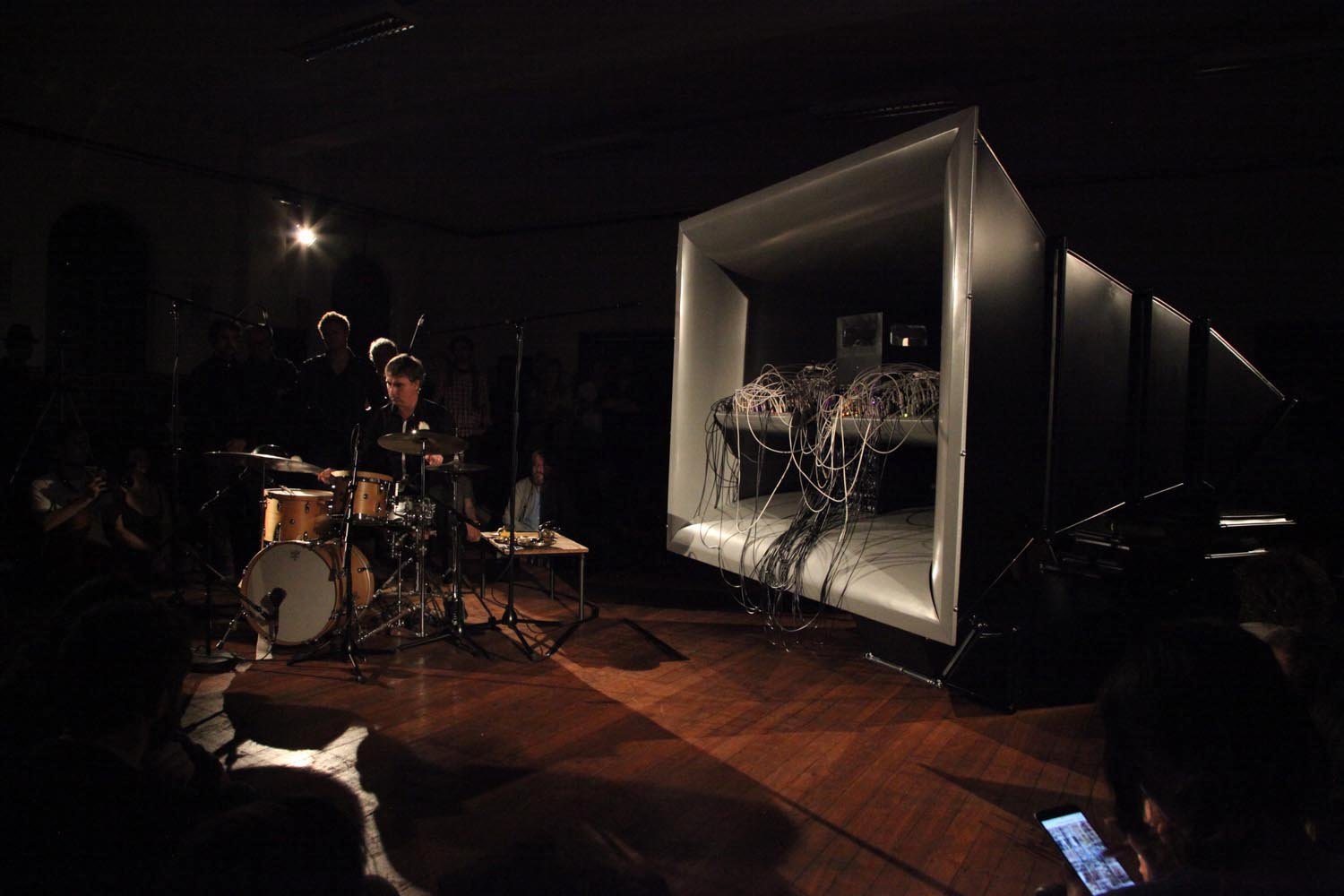

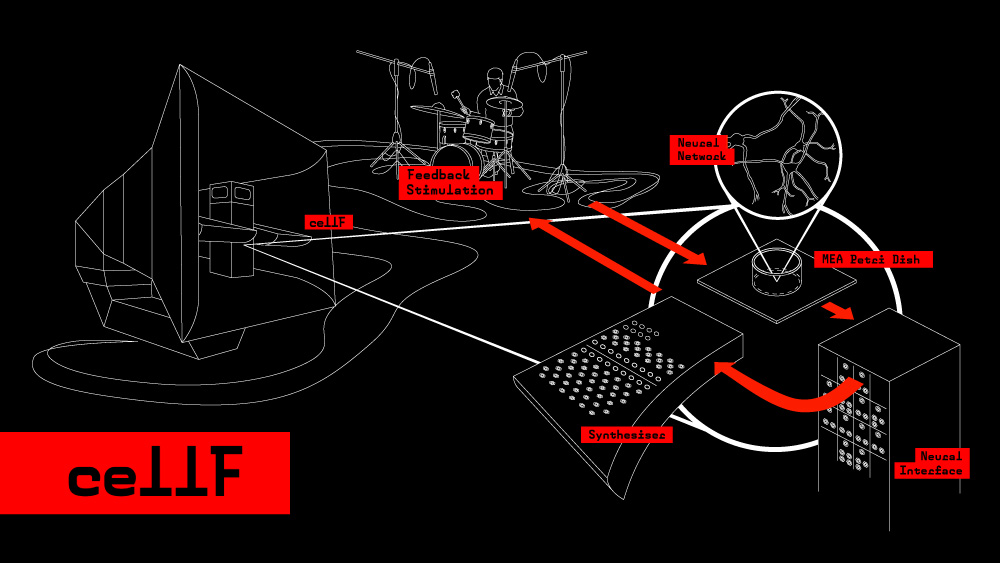

Ben-Ary developed cellF to explore how an artwork can use biological and robotic technologies while also raising questions about the materiality of the human body. He divided the project into two parts. First, he used induced pluripotent stem cell (iPS) technology to reprogram cells taken from his own arm into a functional neural network. Then he built a robotic body that interfaces with this "external brain" so that the two work in synergy. In other words, when sound is fed to cellF, the cells respond by controlling the synthesizer and "perform" live.

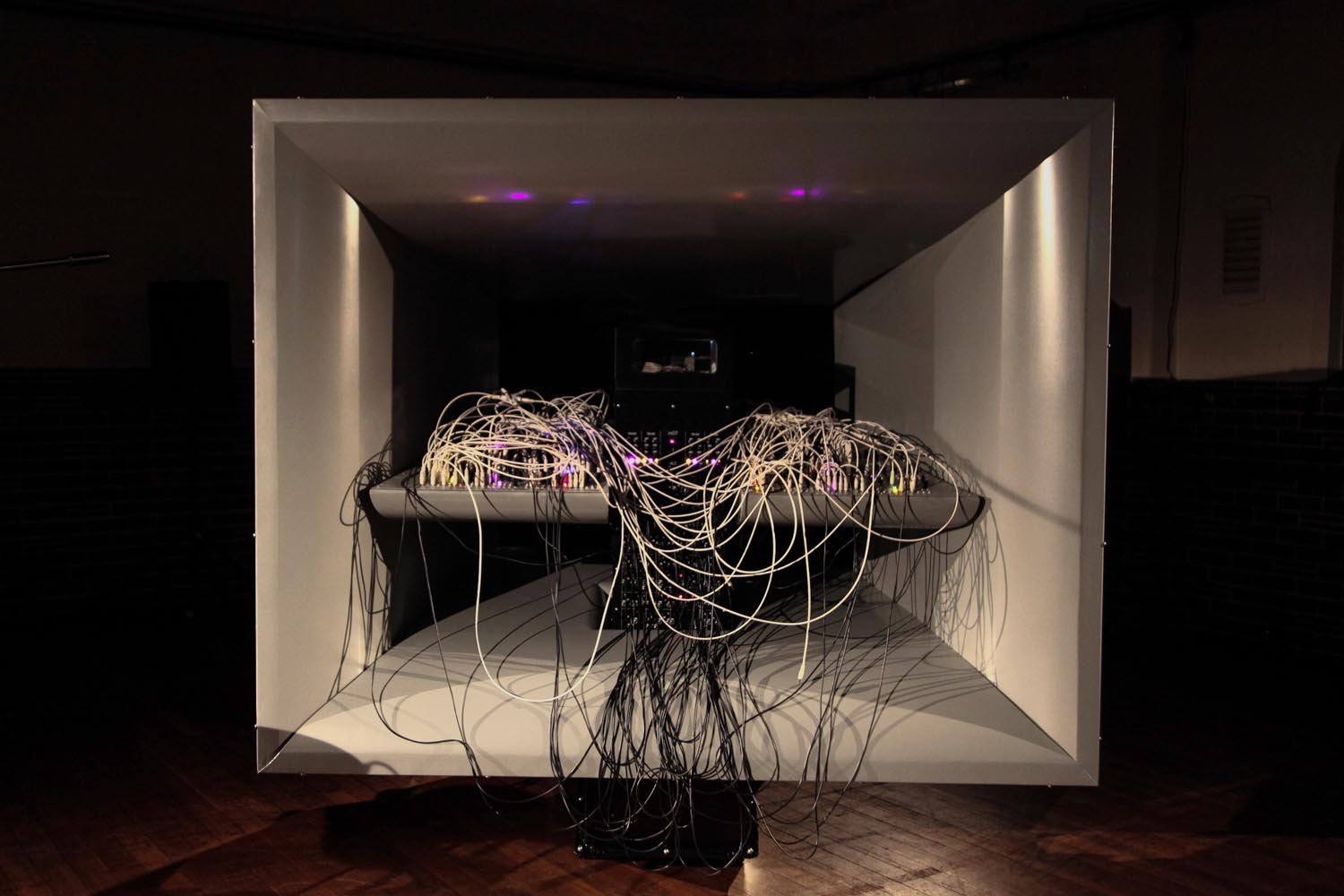

“In cellF I embody my external ‘brain’ with a sound-producing ‘body’ composed of an array of analogue modular synthesizers. The aesthetics of the synthesizer, which are similar to those of an electrophysiological laboratory, fit my vision perfectly. Furthermore, there is a surprising similarity in the way neural networks and synthesizers work: in both, voltages are passed through the components to produce data or sound. There is also a practical consideration: the neural networks produce large and extremely complex data sets, and by its very nature, the analogue synthesizer is well suited to reflecting the complexity and quantity of information via sound. The synthesizers are embedded into a sculptural object that will also house a mini bio-lab that hosts my external brain. Essentially cellF can be seen as a cybernetic musician/composer. The artwork is performative, where human musicians are invited to play with cellF in special one-off shows. The human-made music is fed to the neurons as stimulation, and the neurons respond by controlling the analogue synthesizers, and together they perform live, reflexive and improvised sound pieces or ‘jam sessions’ that are not entirely human,” Guy Ben-Ary explains.

Inspiring or intimidating?

(h/t: beautifuldecay)